Futures and Capabilities

In the Actor Model, concurrency is the default. Sequencing must by arranged explicitly. An important case of sequencing occurs when there is a data dependency between different parts of a system. One part produces a value that another part needs to perform its function.

One mechanism for sequencing data-dependent operations is to create a Future. This is sometimes known as a single-assignment data-flow variable (e.g.: in Mozart/Oz) [1]. It is basically a storage cell that can be written to only once, but read from multiple times. If a read occurs before the write, the read-reply is delayed until the write provides a value. This effectively suspends readers until the write occurs. Note, however, that there is no blocking in an actor system. The asynchronous reply to a read request is simply deferred until a value is available.Future

When an actor representing a Future is created, its value is unknown. In this state, it expects either a #read or a #write request.

LET future_beh = \msg.[ CASE msg OF (cust, #write, value) : [ BECOME value_beh(value) SEND SELF TO cust ] (cust, #read) : [ BECOME wait_beh(cust) ] END ]

When an actor with future_beh receives a #write request, it becomes bound to the value and sends its SELF to the customer cust. This serves as a synchronization signal, indicating that the value has been set.

#read request, it becomes waiting for the customer cust. Notice that there is no reply to the customer at this time.

Value

When a Future has been bound to a value, it expects only #read requests. Any other messages (including #write requests) are silently ignored.

LET value_beh(value) = \msg.[ CASE msg OF (cust, #read) : [ SEND value TO cust ] END ]

When an actor with value_beh receives a #read request, it simply sends its value to the customer cust.

Wait

When a Future receives #read requests before a value is provided by a #write request, the deferred readers are buffered in the waiting actor.

LET wait_beh(waiting) = \msg.[ CASE msg OF (cust, #write, value) : [ BECOME value_beh(value) SEND SELF TO cust SEND waiting TO NEW broadcast_beh(value) ] (cust, #read) : [ BECOME wait_beh(cust, waiting) ] END ]

When an actor with wait_beh receives a #write request, it becomes bound to the value and sends its SELF to the customer cust. Now that a value is available, it creates a broadcast actor to send replies to all the waiting readers.

#read request, it adds the customer cust to the list of deferred readers.

Broadcast

A Broadcast actor is created specifically to send a value to a list of customers. A Future uses a Broadcast actor to deliver replies to deferred readers.

LET broadcast_beh(value) = \waiting.[ CASE waiting OF (first, rest) : [ SEND value TO first SEND rest TO SELF ] last : [ SEND value TO last ] END ]

An actor with broadcast_beh is created with a value. When it receives a waiting list, it uses pattern matching to determine if the list is compound, or just a single value. If the list is compound (a pair), it sends the value to the first customer, and sends the rest of the waiting list back to itself. If the list is just a single value, it simply sends the value to the last customer.

Once the value has been delivered to all the waiting customers, there will be no further references to the Broadcast actor, so it can be reclaimed by the garbage-collector.Example

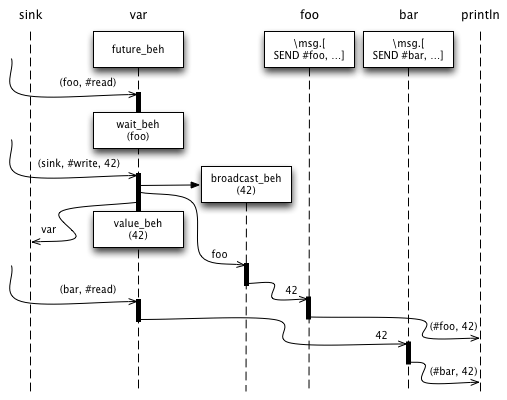

The following example demonstrates the operation of a Future receiving concurrent #read and #write requests. We create two customers, foo and bar, which simply label any message they receive and send it to the console (via println). These customers are used in two concurrent #read requests for the Future var. A concurrent #write request sets the value of the Future to 42. The customer for the #write request is sink (which ignores all messages) because, in this case, we don’t care about when the value is set.

CREATE var WITH future_beh CREATE foo WITH \msg.[ SEND (#foo, msg) TO println ] CREATE bar WITH \msg.[ SEND (#bar, msg) TO println ] SEND (foo, #read) TO var SEND (bar, #read) TO var SEND (sink, #write, 42) TO var

Since the #read and #write requests are issued concurrently, the Future var will receive them in a non-deterministic order. The #write request could arrive first, in which case the #read requests will be satisfied immediately. The #read requests could both arrive before the #write, in which case the read-replies will be deferred until the value is set by #write. Or, the #write request could arrive between the two #read requests, as shown in Figure 1.

Capability

Access to a Future, as described above, implies the capability [2] to #read or #write its value. In many cases we would like to separate those capabilities. We may want to give read-only access to several potential consumers, while reserving write access for the producer. One powerful feature of pure-actor systems is that Actors act as security capabilities. In order to attenuate the capability of a client, we simply create a proxy actor that enforces our desired security policy.

LET read_only_beh(future) = \msg.[ CASE msg OF (cust, #read) : [ SEND msg TO future ] END ] CREATE ro_var WITH read_only_beh(var)

An actor with read_only_beh is created with a reference to a future. When a #read request is received, the message is forwarded to the future. All other messages are silently ignored.

#write request by sending its SELF back to the customer. Our proxy must intercept this response and substitute its own identity to maintain encapsulation of the future.

LET write_sync_beh(cust, msg) = \_.[ SEND msg TO cust ] LET write_only_beh(future) = \msg.[ CASE msg OF (cust, #write, value) : [ SEND (k_write, #write, value) TO future CREATE k_write WITH write_sync_beh(cust, SELF) ] END ] CREATE wo_var WITH write_only_beh(var)

When an actor with write_only_beh receives a #write request, it sends a #write request to the future, providing a customer k_write with write_sync_beh. When the future responds, k_write sends the identity of the proxy to the original customer cust.

Race

Sometimes it is useful to arrange a race among multiple producers. You may have multiple algorithms competing to provide a result. Different algorithms may win under different circumstances. The first one to determine a result will #write to the Future. Subsequent results are ignored. In this case, the write synchronization signal is unimportant, so we change the protocol to expect a #result message with no customer. This behavior acts like an adapter between the racers (sending #result messages, expecting no reply) and the future (expecting #write requests and sending a synchronization reply).

LET result_race_beh(future) = \msg.[ CASE msg OF (#result, value) : [ SEND (SELF, #write, value) TO future BECOME \_.[] ] END ]

When an actor with result_race_beh receives a #result message, it sends a #write request to the future with the result value and its SELF as the customer. Then it becomes a behavior that ignores all subsequent messages. Note that this serves two purposes; to ignore any other race results, and to throw away the write synchronization signal.

Summary

Futures are a fundamental synchronization mechanism in concurrent systems. We have shown how futures work through an actor-based description, illustrating the events that transition a Future through its life-cycle. Building on that foundation, we have demonstrated how, following the Object Capability Model, we can exercise fine-grained control over access to the Future. This is extended to the case of arranging a race among concurrent speculative computations.

References

- [1]

- P. Van Roy, S. Haridi. Concepts, Techniques, and Models of Computer Programming. MIT Press, 2004.

- [2]

- J. Dennis, E. Van Horn. Programming Semantics for Multiprogrammed Computations. ACM Conference on Programming Languages and Pragmatics, San Dimas, CA, August, 1965.

Tags: actor, adapter, asynchronous, blocking, capability, concurrency, data-flow, deadlock, dependency, future, promise, protocol, proxy, security, sequence, synchronization, value

Hi Dale,

why does the broadcaster message itself instead of “BECOME shorter”? My version (should be correct… hope so… feel like taking an exam… :)

Perhaps I have an explanation: this erlang style “tail recursive cycle” may last long, the waiting list can have thousands and thousands of members. Actors are only supposed to do small pieces of computation at any time, kinda “atomical shots” of CPU bursts between comparably much longer IO waits. With BECOME, this actor gets blocked, ie. it can’t serve messages any longer.

The problem with this explanation is: who cares, we don’t want to do anything with this particular actor. Ok, if the implementation is some kinda cooperative multitasking, a cycling actor can block the whole program, but I feel the real (conceptual) target machine of a language like this should have millions of (rather cheap and not particularly smart) CPUs with lots and lots of some serial type of interconnections. So no one gets hurt if broadcast_beh blocks a single CPU.

Huh, hope i wasn’t completely irrelevant to the question…

Oops, now I understand… There’s no “tail recursion” in Humus, the BECOME starts a new listening cycle. In erlang, you have to do explicit tail recursion, otherwise the process exits. Apparently, in Humus, it is up to the garbage collector.